Installing Confluent Kafka with Docker Compose

1. Install Docker Engine (URL: https://docs.docker.com/install/)

2. Install Docker Compose (URL: https://docs.docker.com/compose/install/)

3. Write full-stack.yml

Because this stack runs on a development machine, Kafka is configured with a single broker.

kafka-manager is also included so that Kafka can be managed from a web UI.
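One consequence of the single-broker choice is that a topic's replication factor cannot exceed the number of brokers, which is why the compose file in this step sets every replication factor to 1 (Kafka's default for the offsets topic is 3). The following is a minimal sketch of that check. The helper name `oversized_replication_factors` is hypothetical and not part of Kafka or Docker Compose; the environment values are copied from the compose file.

```python
# Hypothetical helper for illustration; not part of Kafka or Docker Compose.
def oversized_replication_factors(env, broker_count):
    """Return the *_REPLICATION_FACTOR settings that exceed the broker count."""
    too_large = {}
    for key, value in env.items():
        if key.endswith("REPLICATION_FACTOR") and int(value) > broker_count:
            too_large[key] = int(value)
    return too_large


# Replication settings as they appear in full-stack.yml.
single_broker_env = {
    "KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR": "1",
    "CONNECT_CONFIG_STORAGE_REPLICATION_FACTOR": "1",
    "CONNECT_OFFSET_STORAGE_REPLICATION_FACTOR": "1",
    "CONNECT_STATUS_STORAGE_REPLICATION_FACTOR": "1",
}

if __name__ == "__main__":
    # An empty result means every setting fits a one-broker cluster.
    print(oversized_replication_factors(single_broker_env, broker_count=1))
```

If more brokers are added later, the same check can be rerun with a larger `broker_count` before raising any of these values.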

    version: '2.1'

    services:
      zoo1:
        image: zookeeper:3.4.9
        restart: on-failure
        hostname: zoo1
        ports:
          - "2181:2181"
        environment:
          ZOO_MY_ID: 1
          ZOO_PORT: 2181
          ZOO_SERVERS: server.1=zoo1:2888:3888
        volumes:
          - ./full-stack/zoo1/data:/data
          - ./full-stack/zoo1/datalog:/datalog

      kafka1:
        image: confluentinc/cp-kafka:5.2.2
        hostname: kafka1
        restart: on-failure
        ports:
          - "9092:9092"
        environment:
          KAFKA_ADVERTISED_LISTENERS: LISTENER_DOCKER_INTERNAL://kafka1:19092,LISTENER_DOCKER_EXTERNAL://${DOCKER_HOST_IP:-127.0.0.1}:9092
          KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: LISTENER_DOCKER_INTERNAL:PLAINTEXT,LISTENER_DOCKER_EXTERNAL:PLAINTEXT
          KAFKA_INTER_BROKER_LISTENER_NAME: LISTENER_DOCKER_INTERNAL
          KAFKA_ZOOKEEPER_CONNECT: "zoo1:2181"
          KAFKA_BROKER_ID: 1
          KAFKA_LOG4J_LOGGERS: "kafka.controller=INFO,kafka.producer.async.DefaultEventHandler=INFO,state.change.logger=INFO"
          KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 1
          JMX_PORT: 8999
          KAFKA_DELETE_TOPIC_ENABLE: "true"
        volumes:
          - ./full-stack/kafka1/data:/var/lib/kafka/data
        depends_on:
          - zoo1

      kafka-schema-registry:
        image: confluentinc/cp-schema-registry:5.2.2
        hostname: kafka-schema-registry
        restart: on-failure
        ports:
          - "8081:8081"
        environment:
          SCHEMA_REGISTRY_KAFKASTORE_BOOTSTRAP_SERVERS: PLAINTEXT://kafka1:19092
          SCHEMA_REGISTRY_HOST_NAME: kafka-schema-registry
          SCHEMA_REGISTRY_LISTENERS: http://0.0.0.0:8081
        depends_on:
          - zoo1
          - kafka1

      schema-registry-ui:
        image: landoop/schema-registry-ui:0.9.5
        hostname: kafka-schema-registry-ui
        restart: on-failure
        ports:
          - "8001:8000"
        environment:
          SCHEMAREGISTRY_URL: http://kafka-schema-registry:8081/
          PROXY: "true"
        depends_on:
          - kafka-schema-registry

      kafka-rest-proxy:
        image: confluentinc/cp-kafka-rest:5.2.2
        hostname: kafka-rest-proxy
        restart: on-failure
        ports:
          - "8082:8082"
        environment:
          KAFKA_REST_LISTENERS: http://0.0.0.0:8082/
          KAFKA_REST_SCHEMA_REGISTRY_URL: http://kafka-schema-registry:8081/
          KAFKA_REST_HOST_NAME: kafka-rest-proxy
          KAFKA_REST_BOOTSTRAP_SERVERS: PLAINTEXT://kafka1:19092
        depends_on:
          - zoo1
          - kafka1
          - kafka-schema-registry

      kafka-topics-ui:
        image: landoop/kafka-topics-ui:0.9.4
        hostname: kafka-topics-ui
        restart: on-failure
        ports:
          - "8000:8000"
        environment:
          KAFKA_REST_PROXY_URL: "http://kafka-rest-proxy:8082/"
          PROXY: "true"
        depends_on:
          - zoo1
          - kafka1
          - kafka-schema-registry
          - kafka-rest-proxy

      kafka-connect:
        image: datamountaineer/kafka-connect-cassandra #confluentinc/cp-kafka-connect:5.2.2
        hostname: kafka-connect
        restart: on-failure
        ports:
          - "8083:8083"
        environment:
          CONNECT_BOOTSTRAP_SERVERS: "kafka1:19092"
          CONNECT_REST_PORT: 8083
          CONNECT_GROUP_ID: compose-connect-group
          CONNECT_CONFIG_STORAGE_TOPIC: docker-connect-configs
          CONNECT_OFFSET_STORAGE_TOPIC: docker-connect-offsets
          CONNECT_STATUS_STORAGE_TOPIC: docker-connect-status
          CONNECT_KEY_CONVERTER: "org.apache.kafka.connect.json.JsonConverter"
          CONNECT_KEY_CONVERTER_SCHEMAS_ENABLE: "false"
          CONNECT_KEY_CONVERTER_SCHEMA_REGISTRY_URL: 'http://kafka-schema-registry:8081'
          CONNECT_VALUE_CONVERTER: "org.apache.kafka.connect.json.JsonConverter"
          CONNECT_VALUE_CONVERTER_SCHEMAS_ENABLE: "false"
          CONNECT_VALUE_CONVERTER_SCHEMA_REGISTRY_URL: 'http://kafka-schema-registry:8081'
          CONNECT_INTERNAL_KEY_CONVERTER: "org.apache.kafka.connect.json.JsonConverter"
          CONNECT_INTERNAL_KEY_CONVERTER_SCHEMAS_ENABLE: "false"
          CONNECT_INTERNAL_VALUE_CONVERTER: "org.apache.kafka.connect.json.JsonConverter"
          CONNECT_INTERNAL_VALUE_CONVERTER_SCHEMAS_ENABLE: "false"
          CONNECT_REST_ADVERTISED_HOST_NAME: "kafka-connect"
          CONNECT_LOG4J_ROOT_LOGLEVEL: "INFO"
          CONNECT_LOG4J_LOGGERS: "org.apache.kafka.connect.runtime.rest=WARN,org.reflections=ERROR"
          CONNECT_CONFIG_STORAGE_REPLICATION_FACTOR: "1"
          CONNECT_OFFSET_STORAGE_REPLICATION_FACTOR: "1"
          CONNECT_STATUS_STORAGE_REPLICATION_FACTOR: "1"
          CONNECT_PLUGIN_PATH: '/usr/share/java,/etc/kafka-connect/jars'
        volumes:
          - ./connectors:/etc/kafka-connect/jars/
        depends_on:
          - zoo1
          - kafka1
          - kafka-schema-registry
          - kafka-rest-proxy

      kafka-connect-ui:
        image: parrotstream/kafka-connect-ui:latest #landoop/kafka-connect-ui:latest
        hostname: kafka-connect-ui
        restart: on-failure
        ports:
          - "8003:8000"
        environment:
          CONNECT_URL: "http://kafka-connect:8083/"
          PROXY: "true"
        depends_on:
          - kafka-connect

      ksql-server:
        image: confluentinc/cp-ksql-server:5.2.2
        hostname: ksql-server
        restart: on-failure
        ports:
          - "8088:8088"
        environment:
          KSQL_BOOTSTRAP_SERVERS: PLAINTEXT://kafka1:19092
          KSQL_LISTENERS: http://0.0.0.0:8088/
          KSQL_KSQL_SERVICE_ID: ksql-server_
        depends_on:
          - zoo1
          - kafka1

      zoonavigator-web:
        image: elkozmon/zoonavigator-web:0.5.1
        ports:
          - "8004:8000"
        restart: on-failure
        environment:
          API_HOST: "zoonavigator-api"
          API_PORT: 9001
        links:
          - zoonavigator-api
        depends_on:
          - zoonavigator-api

      zoonavigator-api:
        image: elkozmon/zoonavigator-api:0.5.1
        restart: on-failure
        environment:
          SERVER_HTTP_PORT: 9001
        depends_on:
          - zoo1

      kafka-manager:
        image: hlebalbau/kafka-manager:stable
        container_name: kafka-manager
        restart: on-failure
        ports:
          - "9000:9000"
        environment:
          ZK_HOSTS: zoo1:2181
          APPLICATION_SECRET: "random-secret" # letmein
          KM_ARGS: -Djava.net.preferIPv4Stack=true
          KAFKA_MANAGER_AUTH_ENABLED: "true"
          KAFKA_MANAGER_USERNAME: admin
          KAFKA_MANAGER_PASSWORD: admin123
        depends_on:
          - kafka1
          - zoo1
        command: -Dpidfile.path=/dev/null

4. Run full-stack.yml with Docker Compose

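Start the containers with the following command.

    $ docker-compose -f full-stack.yml up

To run the stack in the background instead, add -d:

    $ docker-compose -f full-stack.yml up -d

Once the containers are running, the Kafka management pages are available at the addresses below.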

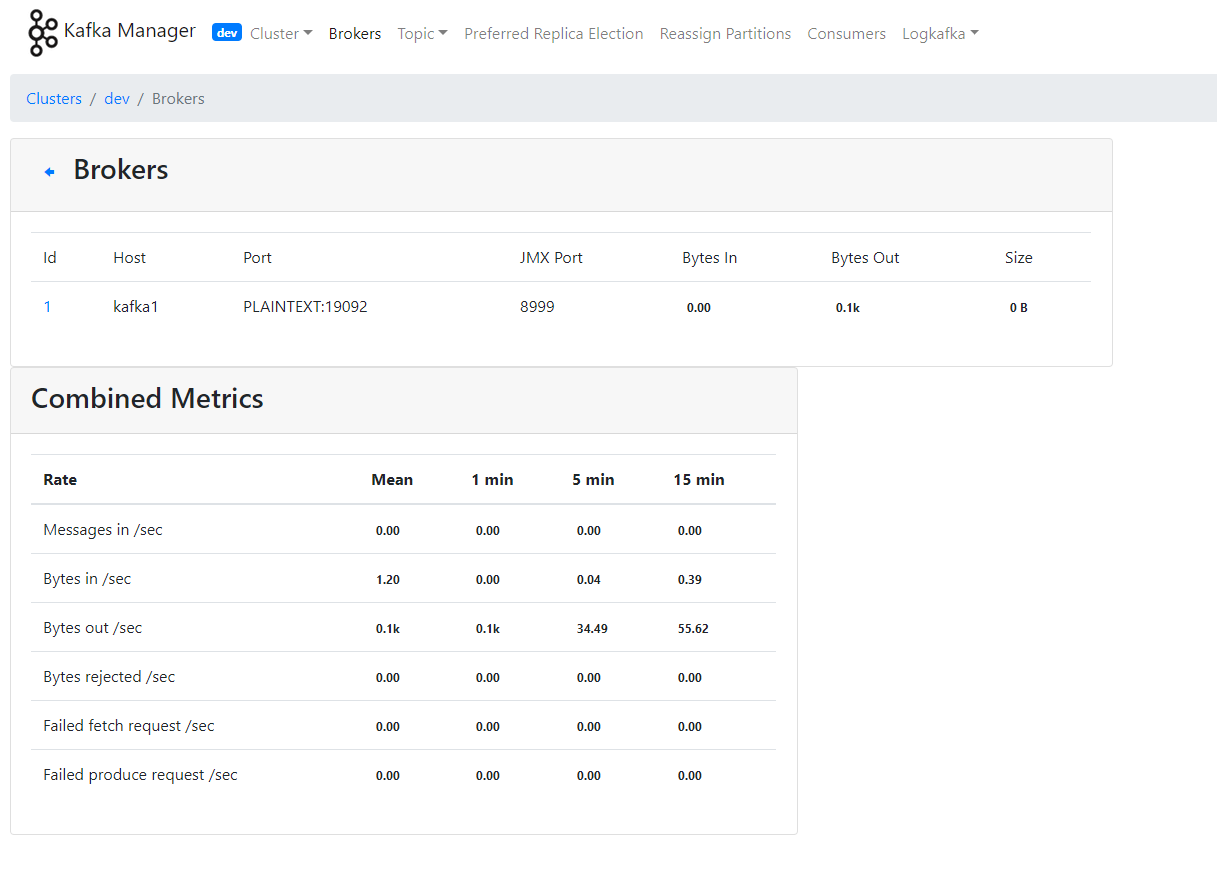

- Kafka Manager : http://localhost:9000

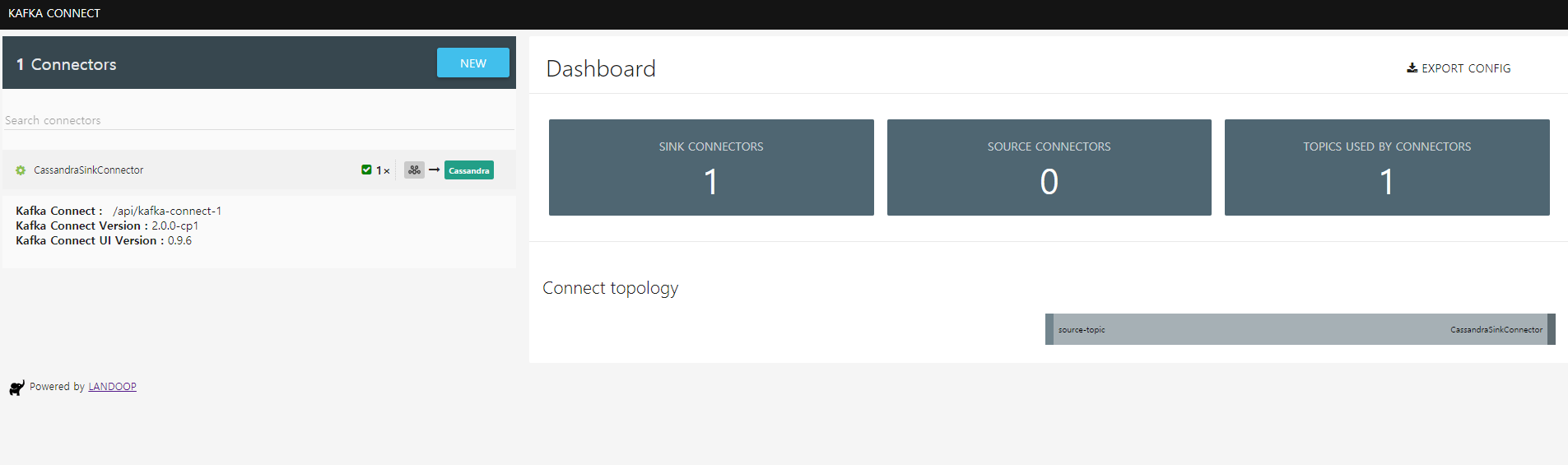

- Kafka Connect UI : http://localhost:8003

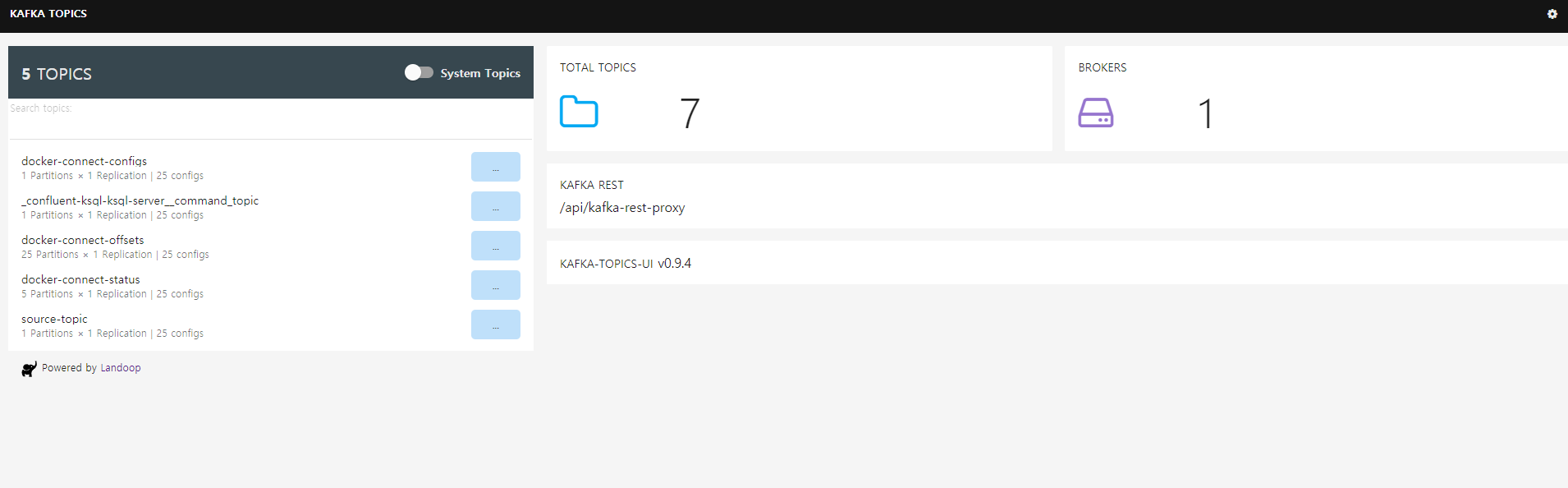

- Kafka Topics : http://localhost:8000

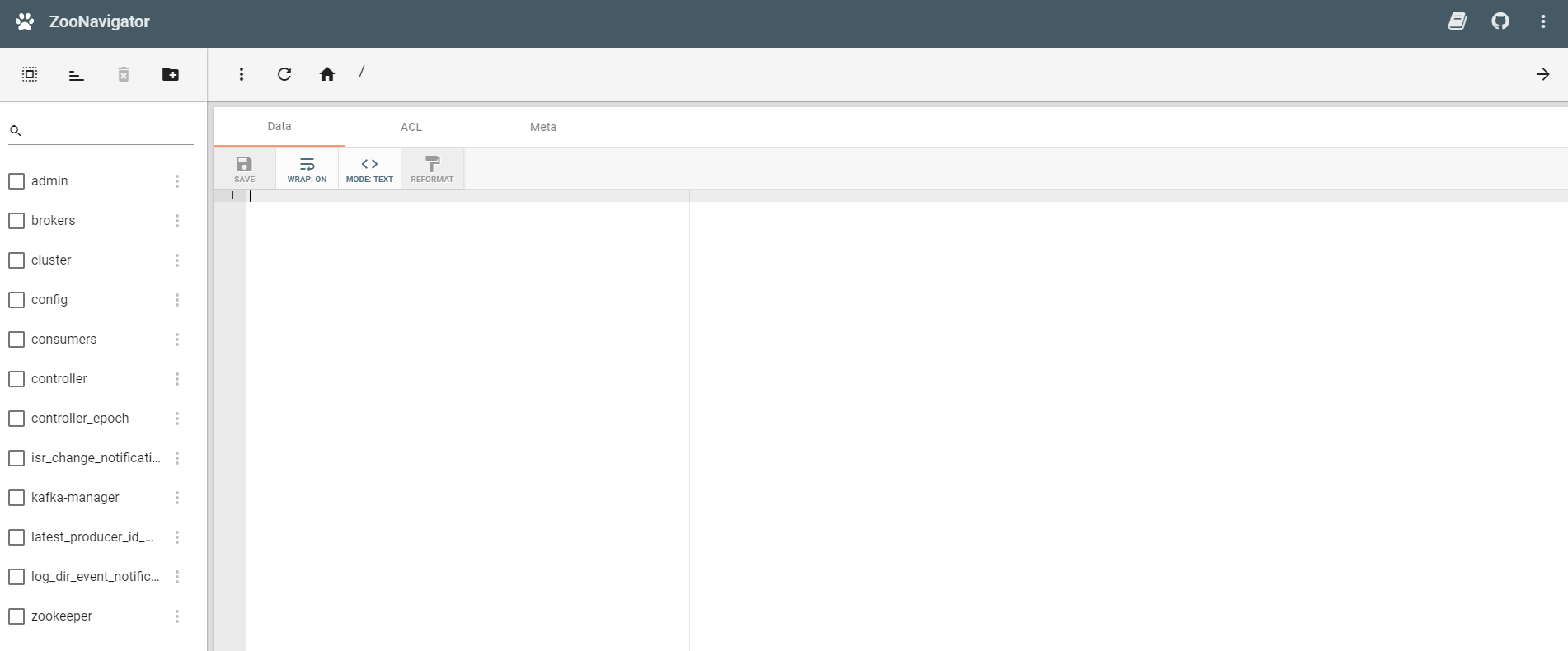

- ZooKeeper Navigator Web : http://localhost:8004
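
Some containers take a while to start after `up -d`, so it can help to confirm that each UI port accepts connections before opening a browser. The following is a minimal sketch using only the Python standard library. The function names `port_is_open` and `check_services` are hypothetical, and the port numbers are the host ports published in full-stack.yml for the pages listed above. It checks TCP reachability only, not whether the application behind the port is healthy.

```python
import socket

# Host ports published by full-stack.yml for the web UIs listed above.
UI_PORTS = {
    "Kafka Manager": 9000,
    "Kafka Connect UI": 8003,
    "Kafka Topics UI": 8000,
    "ZooKeeper Navigator Web": 8004,
}


def port_is_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


def check_services(host="localhost", ports=UI_PORTS):
    """Map each service name to whether its port currently accepts connections."""
    return {name: port_is_open(host, port) for name, port in ports.items()}


if __name__ == "__main__":
    for name, is_up in check_services().items():
        print(f"{name}: {'up' if is_up else 'down'}")
```

A service that stays down can be inspected with `docker-compose -f full-stack.yml logs` followed by the service name.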
